In this article, we look at why developers use YOLO for real-time object detection: what real-time object detection is, what YOLO is, and how its algorithm works. The main focus is the convolutional neural network (CNN) model that is used to classify objects in an image.

Tesla's Autopilot has brought the idea of the self-driving car into the spotlight, and you may be wondering how it actually works. Frequent answers point to technologies such as RADAR, LiDAR, GPS localization, and many more. A central piece of the puzzle, however, is real-time object detection, and YOLO is one of the best-known approaches to it.

Let’s dive into the details.

What is Object Detection?

To understand object detection, it helps to start with the simpler task of image classification.

Image classification assigns an image to one of several categories, such as dog, cat, or human. The key point is that each image gets only one category. Object detection, by contrast, finds the individual objects within that image: which types of objects are present, how many there are, and where each one is.

Take a self-driving car as an example. It relies on the same idea, but instead of identifying a single object it has to identify many at once, such as traffic lights, pedestrians, road signs, and other vehicles, and then make the right decisions based on what it finds.

The job of object detection is to identify the objects in an image and draw a rectangular box around each one. If you want the exact boundary of each object rather than a box, that process is called image segmentation.
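The difference between the two tasks shows up in the shape of their results. The sketch below contrasts them using made-up data: the `Detection` type, the labels, and all coordinates are hypothetical and do not come from a real model.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object: a class label plus a rectangular box in pixels."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int


# Image classification: a single label for the whole image (hypothetical value).
classification_result = "street scene"

# Object detection: one entry per object, each with its own location
# (hypothetical values, for illustration only).
detection_result = [
    Detection("traffic light", 410, 40, 440, 120),
    Detection("car", 120, 300, 380, 460),
    Detection("person", 500, 280, 560, 450),
]

# Count how many objects of each type were found.
counts = {}
for det in detection_result:
    counts[det.label] = counts.get(det.label, 0) + 1
print(counts)
```

Image segmentation would go one step further and return a per-pixel mask for each object instead of four box coordinates.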

Why do developers use YOLO for real-time object detection?

YOLO stands for “You Only Look Once”, although many people mistake it for “You Only Live Once”. It is a real-time method for localizing and identifying objects at up to 155 frames per second. The architecture splits the input image into an m x m grid, and each grid cell generates 2 bounding boxes together with class probabilities for them. Note that a bounding box can be larger than the grid cell that predicts it.

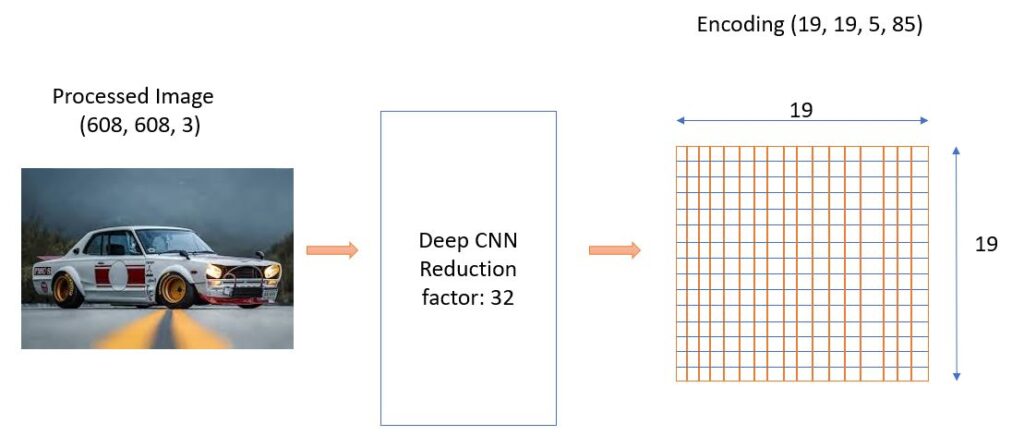

The YOLO architecture resembles an FCNN (fully convolutional neural network): an image of size n x n is passed through the network, and the output is an m x m grid of predictions.

The system divides the input image into an S x S grid. If the center of an object falls inside a grid cell, that cell alone is responsible for detecting the object.
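As a rough illustration of this rule, the sketch below works out which cell of an S x S grid contains an object's center. The function name `responsible_cell`, the 448 x 448 image size, the 7 x 7 grid, and the example coordinates are all assumptions chosen for illustration.

```python
def responsible_cell(center_x, center_y, image_width, image_height, grid_size):
    """Return (row, col) of the grid cell that contains an object's center."""
    col = int(center_x / image_width * grid_size)
    row = int(center_y / image_height * grid_size)
    # Clamp so a center lying exactly on the right or bottom edge stays in range.
    col = min(col, grid_size - 1)
    row = min(row, grid_size - 1)
    return row, col


# Assumed example: a 448 x 448 image split into a 7 x 7 grid,
# with an object centered at pixel (x=250, y=100).
row, col = responsible_cell(250, 100, 448, 448, grid_size=7)
print(row, col)  # 1 3
```

Each cell here is 64 pixels wide, so x=250 lands in column 3 and y=100 lands in row 1, counting from zero.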

How does the YOLO algorithm work?

Object detection algorithms fall into two groups.

Algorithms based on regression

These algorithms predict the bounding boxes and classes for the whole image in a single run, instead of first selecting the interesting regions. The best-known examples in this category are SSD (Single Shot MultiBox Detector) and the YOLO (You Only Look Once) family. They are commonly used for real-time object detection, trading a little accuracy for much higher speed.

Algorithms based on classification

These algorithms work in two stages. First they select the interesting regions of the image, and then they classify each region using a CNN (convolutional neural network). This approach is slower because a prediction has to be run for every selected region. The most widely used example is R-CNN (Region-based Convolutional Neural Network), along with its successors Fast R-CNN and Faster R-CNN.

To understand the algorithm, it is important to know what is actually being predicted. The goal is to predict the class of each object and the bounding box that specifies its location. Each bounding box can be described using four descriptors:

- Its width (bw)

- Its height (bh)

- The center of the bounding box (bx, by)

- The value c, which corresponds to the class of the object (for example traffic light, car, bus, etc.)

We also have to predict the probability (the pc value) that the bounding box contains an object at all.
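One way to picture a single prediction is as a small record holding these values. The sketch below is only an illustration: the `BoxPrediction` type, the `CLASS_NAMES` list, and the use of pixel units are assumptions, and real YOLO implementations typically encode the coordinates relative to the grid cell or image size.

```python
from dataclasses import dataclass


@dataclass
class BoxPrediction:
    pc: float  # probability that the box contains an object
    bx: float  # x coordinate of the box center
    by: float  # y coordinate of the box center
    bw: float  # box width
    bh: float  # box height
    c: int     # index of the predicted class

    def corners(self):
        """Convert center/size form to (x_min, y_min, x_max, y_max)."""
        half_w = self.bw / 2
        half_h = self.bh / 2
        return (self.bx - half_w, self.by - half_h,
                self.bx + half_w, self.by + half_h)


# Hypothetical class list, matching the examples in the text.
CLASS_NAMES = ["traffic light", "car", "bus"]

# Hypothetical prediction: a confident "car" box centered at (100, 60).
pred = BoxPrediction(pc=0.9, bx=100.0, by=60.0, bw=40.0, bh=20.0, c=1)
print(CLASS_NAMES[pred.c], pred.corners())
```

The corner form is convenient for drawing the rectangle and for measuring how much two boxes overlap.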

Put simply, YOLO splits the image into cells, typically using a 19 x 19 grid. Each cell is responsible for predicting 5 bounding boxes, so we end up with 19 x 19 x 5 = 1805 bounding boxes for a single image.

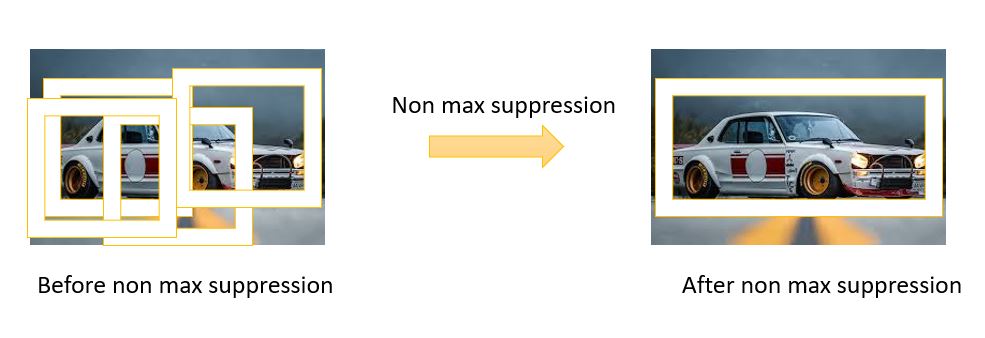
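The box count quoted above follows directly from the grid size and the number of boxes per cell. A quick check, using only the numbers given in the text:

```python
grid_size = 19       # cells per side, as stated in the text
boxes_per_cell = 5   # boxes predicted by each cell, as stated in the text

num_cells = grid_size * grid_size
num_boxes = num_cells * boxes_per_cell
print(num_cells, num_boxes)  # 361 1805
```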

Most of these cells and bounding boxes contain no object, which is why we predict the pc value: it lets us discard the boxes with a low probability of containing an object. Among the remaining boxes that overlap the same object, only the one with the highest probability is kept. This process is called non-max suppression.
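A minimal sketch of this filtering step is shown below. It is a plain-Python illustration, not the code of any particular YOLO release: the function names, the two 0.5 thresholds, and the example boxes are assumptions, and overlap is measured with intersection over union (IoU), the usual choice. Real implementations normally run this step separately for each class.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, score_threshold=0.5, iou_threshold=0.5):
    """Return the indices of the boxes that are kept, highest score first."""
    # Step 1: drop boxes whose object probability (pc) is too low.
    candidates = [i for i, s in enumerate(scores) if s >= score_threshold]
    # Step 2: visit the remaining boxes from most to least confident.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept = []
    for i in candidates:
        # Keep a box only if it does not overlap too much with a kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return kept


# Hypothetical boxes and pc values, for illustration only.
boxes = [
    (100, 100, 200, 200),  # strong detection of an object
    (105, 105, 205, 205),  # near-duplicate of the first box
    (300, 300, 400, 400),  # a separate object
    (10, 10, 50, 50),      # low-probability box, likely background
]
scores = [0.9, 0.8, 0.7, 0.2]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

In this example the last box is removed by the probability threshold, and the second box is suppressed because it overlaps the higher-scoring first box with an IoU of about 0.82.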

What are the advantages of YOLO Object Detection?

Now that we know how YOLO works, let’s look at its advantages.

- Faster speed: YOLO runs considerably faster than most other object detection algorithms.

- Highly generalized network: because of its algorithm and the way it is trained, YOLO learns generalizable representations of objects.

- Better than real-time: it processes frames at 45 fps with the larger network and at up to 150 fps with the smaller network.
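To put these frame rates in perspective, the short sketch below converts them into the time available per frame. The 30 fps reference point for "real-time" video is an assumption added for comparison, and `frame_budget_ms` is a hypothetical helper name.

```python
def frame_budget_ms(fps):
    """Time available to process one frame at the given frame rate."""
    return 1000.0 / fps


# 30 fps is an assumed real-time baseline; 45 and 150 come from the text.
for fps in (30, 45, 150):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
```

At 45 fps each frame is handled in roughly 22 ms, comfortably inside the roughly 33 ms budget of 30 fps video.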

What are the disadvantages of YOLO Object Detection?

YOLO also has some disadvantages, listed below:

- It struggles to detect small objects in an image.

- It struggles to detect objects that are very close to each other, because each grid cell can predict only a limited number of boxes.

- It makes more localization errors and has lower recall than Faster R-CNN.

I hope you learned something new and gained a deeper understanding of YOLO from this article. Stay tuned for more articles like this one. Until then, keep reading!
